mutable struct VariationalLearner

p::Float64 # prob. of using G1

gamma::Float64 # learning rate

P1::Float64 # prob. of L1 \ L2

P2::Float64 # prob. of L2 \ L1

endSpeaking and listening

lecture

Update 7 May 2024

Fixed the buggy learn! function. Also added the missing link to the homework.

Plan

- Last week, we ran out of time

- Better go slowly and build a solid foundation rather than try to cover as much ground as possible

- Hence, today:

- Finish last week’s material

- Introduce a little bit of new material: implementing interactions between variational learners

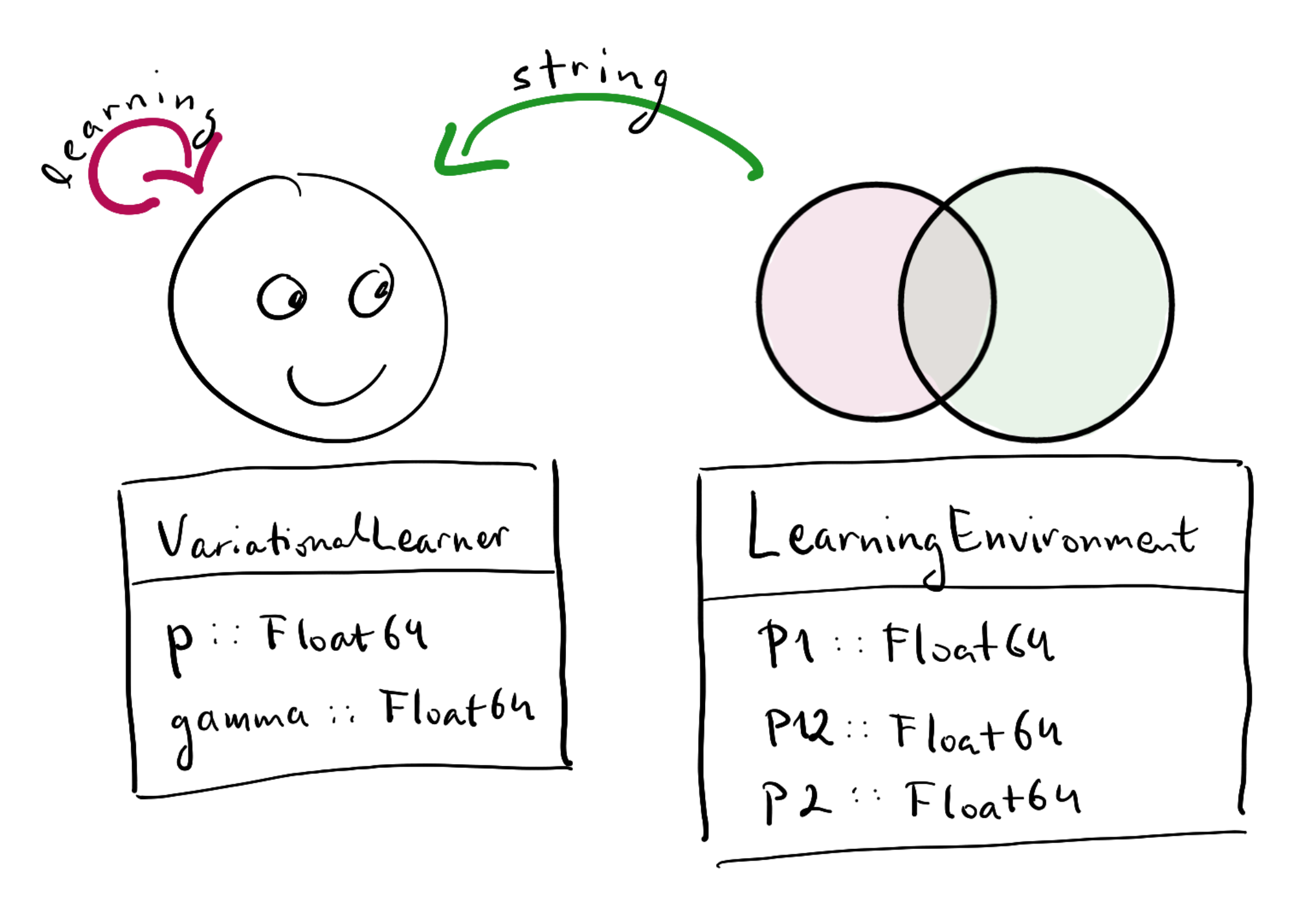

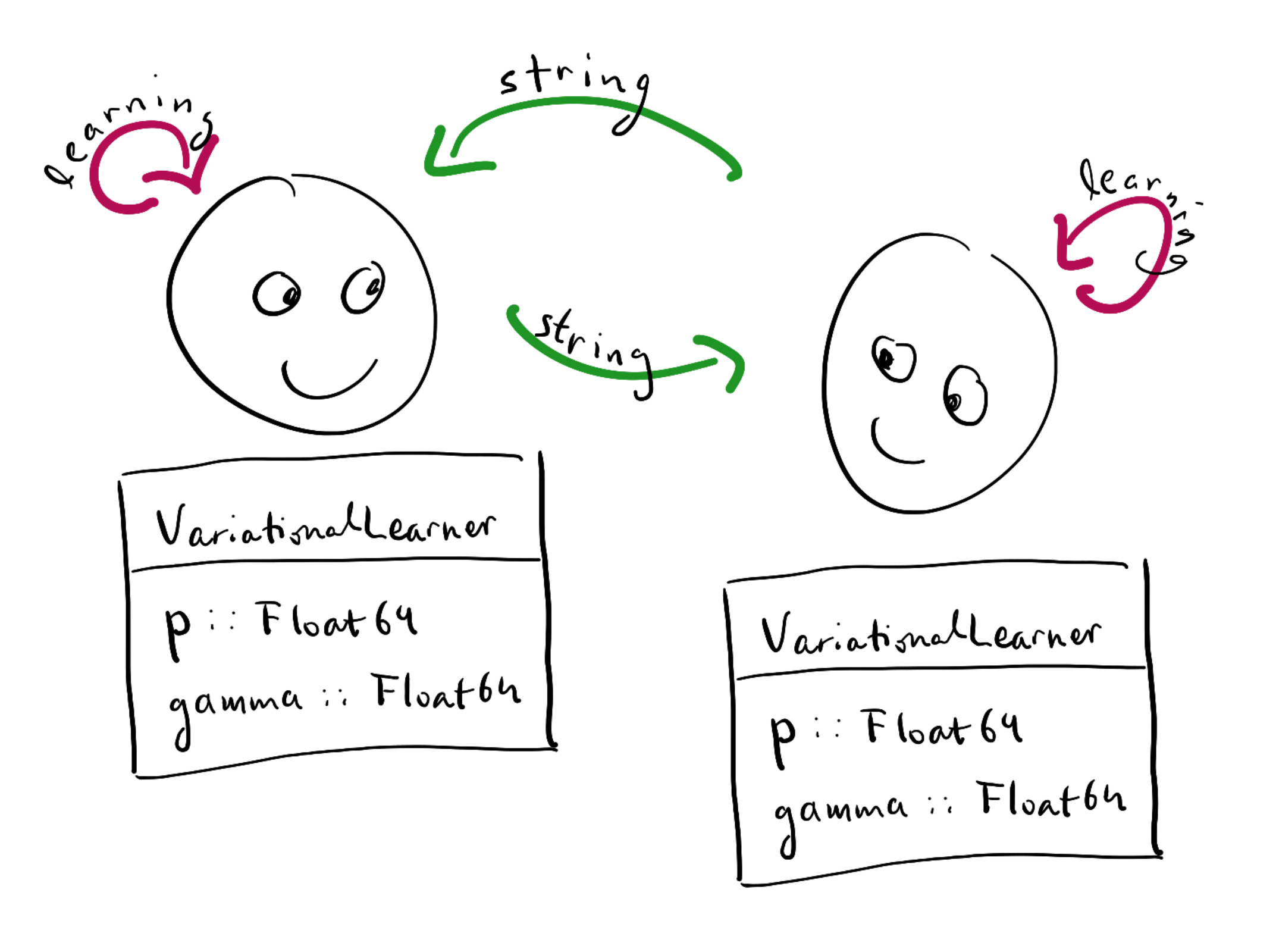

Dropping the environment

- So far, we’ve been working with the abstraction of a

LearningEnvironment:

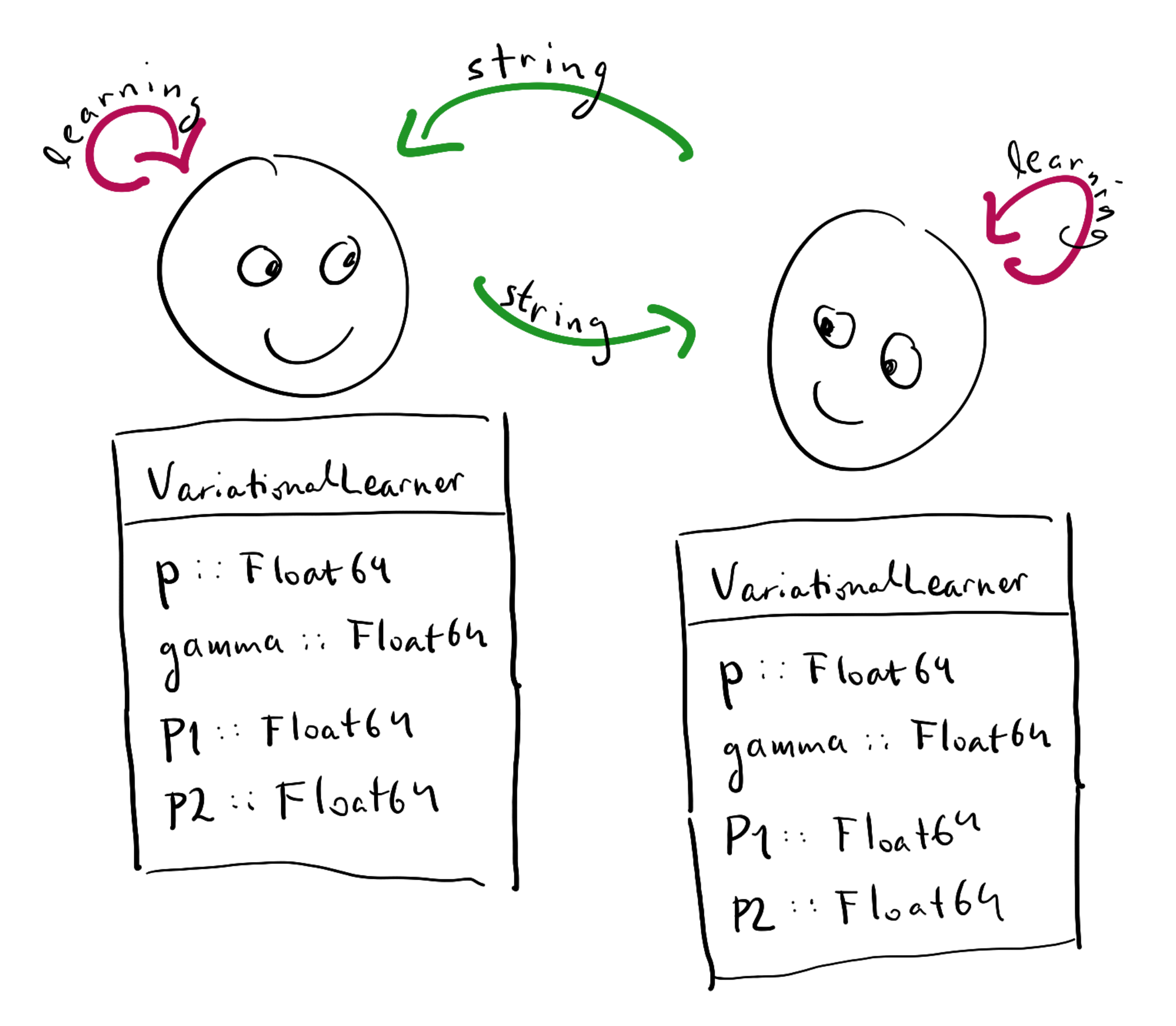

- We will now drop this and have two

VariationalLearners interacting:

- The probabilities

P1andP2now need to be represented inside the learner:

- Hence we define:
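As a quick illustration (the parameter values are arbitrary), a learner is created by passing the four fields in the order they are declared. Because the struct is `mutable`, its fields can also be reassigned in place, which is what a learning function needs to do. The struct definition is repeated so that the block runs on its own.

```julia
# Struct as defined in the lecture, repeated so this block is self-contained
mutable struct VariationalLearner
    p::Float64      # prob. of using G1
    gamma::Float64  # learning rate
    P1::Float64     # prob. of L1 \ L2
    P2::Float64     # prob. of L2 \ L1
end

# Positional constructor: fields in declaration order (p, gamma, P1, P2)
x = VariationalLearner(0.1, 0.01, 0.4, 0.1)

println(x.p)      # 0.1
println(x.gamma)  # 0.01

# The struct is mutable, so a field can be updated in place
x.p = 0.5
println(x.p)      # 0.5
```

Had the struct been declared without `mutable`, the assignment `x.p = 0.5` would throw an error, and a learner could not update its own `p`.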

Exercise

Write three functions:

- `speak(x::VariationalLearner)`: takes a variational learner as argument and returns a string uttered by the learner

- `learn!(x::VariationalLearner, s::String)`: makes variational learner `x` learn from string `s`

- `interact!(x::VariationalLearner, y::VariationalLearner)`: makes `x` utter a string and `y` learn from that string

Answer (speak)

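```julia
using StatsBase

function speak(x::VariationalLearner)
    # choose a grammar: G1 with probability p, G2 otherwise
    g = sample(["G1", "G2"], Weights([x.p, 1 - x.p]))
    if g == "G1"
        # G1 utters S1 with probability P1, otherwise S12 (shared by both grammars)
        return sample(["S1", "S12"], Weights([x.P1, 1 - x.P1]))
    else
        # G2 utters S2 with probability P2, otherwise S12
        return sample(["S2", "S12"], Weights([x.P2, 1 - x.P2]))
    end
end
```

```
speak (generic function with 1 method)
```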
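One way to sanity-check `speak` is to draw many utterances and tally them. With grammar probability p, a learner should produce S1 with probability p·P1, S2 with probability (1 − p)·P2, and S12 otherwise. The sketch below assumes a Base-only variant of `speak` that uses `rand() < q` (true with probability q) in place of StatsBase's `sample`, so it runs without packages. The helper `tally` is hypothetical.

```julia
using Random

mutable struct VariationalLearner
    p::Float64      # prob. of using G1
    gamma::Float64  # learning rate
    P1::Float64     # prob. of L1 \ L2
    P2::Float64     # prob. of L2 \ L1
end

# Base-only variant of speak (assumption: rand() < q replaces sample/Weights)
function speak(x::VariationalLearner)
    if rand() < x.p                          # G1 chosen with probability p
        return rand() < x.P1 ? "S1" : "S12"
    else                                     # G2 chosen otherwise
        return rand() < x.P2 ? "S2" : "S12"
    end
end

# Hypothetical helper: count each string in n utterances
function tally(x::VariationalLearner, n::Int)
    counts = Dict("S1" => 0, "S2" => 0, "S12" => 0)
    for _ in 1:n
        counts[speak(x)] += 1
    end
    return counts
end

Random.seed!(123)
x = VariationalLearner(0.1, 0.01, 0.4, 0.1)
n = 100_000
counts = tally(x, n)
freqs = Dict(k => v / n for (k, v) in counts)

# theoretical proportions: S1 = p*P1 = 0.04, S2 = (1-p)*P2 = 0.09, S12 = 0.87
println(freqs)
```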

Answer (learn!)

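```julia
function learn!(x::VariationalLearner, s::String)
    # choose a grammar to try on the incoming string
    g = sample(["G1", "G2"], Weights([x.p, 1 - x.p]))
    if g == "G1" && s != "S2"
        # G1 can parse s: reward G1 (p increases)
        x.p = x.p + x.gamma * (1 - x.p)
    elseif g == "G1" && s == "S2"
        # G1 cannot parse S2: punish G1 (p decreases)
        x.p = x.p - x.gamma * x.p
    elseif g == "G2" && s != "S1"
        # G2 can parse s: reward G2 (p decreases)
        x.p = x.p - x.gamma * x.p
    elseif g == "G2" && s == "S1"
        # G2 cannot parse S1: punish G2 (p increases)
        x.p = x.p + x.gamma * (1 - x.p)
    end
    return x.p
end
```

```
learn! (generic function with 1 method)
```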
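The four branches of `learn!` can be checked by hand in the edge cases where the grammar choice is deterministic: a learner with p = 1 always picks G1, and one with p = 0 always picks G2. With γ = 0.01, hearing S2 at p = 1 punishes G1, giving 1 − 0.01·1 = 0.99, while hearing S1 at p = 0 punishes G2, giving 0 + 0.01·(1 − 0) = 0.01. As a sketch, this assumes a Base-only variant of `learn!` that uses `rand() < x.p` in place of `sample`.

```julia
mutable struct VariationalLearner
    p::Float64      # prob. of using G1
    gamma::Float64  # learning rate
    P1::Float64     # prob. of L1 \ L2
    P2::Float64     # prob. of L2 \ L1
end

# Base-only variant of learn! (assumption: rand() < x.p picks G1 with probability p)
function learn!(x::VariationalLearner, s::String)
    g = rand() < x.p ? "G1" : "G2"
    if g == "G1" && s != "S2"
        x.p = x.p + x.gamma * (1 - x.p)   # reward G1
    elseif g == "G1" && s == "S2"
        x.p = x.p - x.gamma * x.p         # punish G1
    elseif g == "G2" && s != "S1"
        x.p = x.p - x.gamma * x.p         # reward G2
    elseif g == "G2" && s == "S1"
        x.p = x.p + x.gamma * (1 - x.p)   # punish G2
    end
    return x.p
end

# p = 1: rand() < 1.0 always holds, so G1 is always chosen
println(learn!(VariationalLearner(1.0, 0.01, 0.4, 0.1), "S1"))   # 1.0
println(learn!(VariationalLearner(1.0, 0.01, 0.4, 0.1), "S2"))   # 0.99

# p = 0: rand() < 0.0 never holds, so G2 is always chosen
println(learn!(VariationalLearner(0.0, 0.01, 0.4, 0.1), "S12"))  # 0.0
println(learn!(VariationalLearner(0.0, 0.01, 0.4, 0.1), "S1"))   # 0.01

# learn! also mutates the learner it is given
y = VariationalLearner(0.0, 0.01, 0.4, 0.1)
learn!(y, "S1")
println(y.p)                                                     # 0.01
```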

Answer (interact!)

```julia
function interact!(x::VariationalLearner, y::VariationalLearner)
    s = speak(x)    # x utters a string
    learn!(y, s)    # y learns from it; the updated y.p is returned
end
```

```
interact! (generic function with 1 method)
```

Picking random agents

- `rand()` without arguments returns a random float in the interval [0, 1)

- `rand(x)` with argument `x` returns a random element of `x`

- If we have a population of agents `pop`, then we can use `rand(pop)` to pick a random agent

- This is very useful for evolving an agent-based model (ABM)
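A small sketch of these calls follows (the seed, the range `1:6` and the population size are arbitrary choices for illustration). It also shows that `rand(pop)` returns the agent stored in `pop` itself, not a copy, so modifying the drawn agent modifies the population. Note that two independent draws can return the same agent, so in a population loop an agent may occasionally interact with itself.

```julia
using Random

mutable struct VariationalLearner
    p::Float64      # prob. of using G1
    gamma::Float64  # learning rate
    P1::Float64     # prob. of L1 \ L2
    P2::Float64     # prob. of L2 \ L1
end

Random.seed!(42)

r = rand()          # a Float64 in [0, 1)
face = rand(1:6)    # a random element of the range 1:6

pop = [VariationalLearner(0.1, 0.01, 0.4, 0.1) for i in 1:10]

a = rand(pop)       # a random agent: the object stored in pop, not a copy
a.p = 0.9           # so this changes that agent inside pop as well
println(count(ag -> ag.p == 0.9, pop))   # 1

# Two independent draws may pick the same agent
x, y = rand(pop), rand(pop)
println(x === y)    # true or false, depending on the draws
```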

Aside: for loops

- A `for` loop is used to repeat a code block a number of times

- Similar to array comprehensions; however, the result is not stored in an array
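The difference from a comprehension can be seen by computing the same values both ways: the comprehension collects one value per iteration into an array, whereas a `for` loop only runs its body, so any results must be stored explicitly (here with `push!`).

```julia
# A comprehension collects one value per iteration into an array
squares = [i^2 for i in 1:3]
println(squares)          # [1, 4, 9]

# A for loop just runs its body; to keep the results, store them yourself
squares2 = Int[]
for i in 1:3
    push!(squares2, i^2)
end
println(squares2)         # [1, 4, 9]
```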

```julia
for i in 1:3
    println("Current number is " * string(i))
end
```

```
Current number is 1
Current number is 2
Current number is 3
```

A whole population

- Using a `for` loop and the functions we defined above, it is now very easy to iterate, or evolve, a population of agents:
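Because `VariationalLearner` is mutable and `rand(pop)` returns the agent itself, each call to `interact!` changes exactly one listener inside `pop`. The self-contained sketch below runs 100 interactions and counts the agents whose `p` has moved away from its initial value; there can be at most 100 of them. It assumes Base-only variants of `speak` and `learn!` (with `rand() < q` in place of StatsBase's `sample`). The `learn!` here folds the four branches into two equivalent cases.

```julia
using Random

mutable struct VariationalLearner
    p::Float64      # prob. of using G1
    gamma::Float64  # learning rate
    P1::Float64     # prob. of L1 \ L2
    P2::Float64     # prob. of L2 \ L1
end

# Base-only variant of speak (assumption: rand() < q replaces sample/Weights)
function speak(x::VariationalLearner)
    if rand() < x.p
        return rand() < x.P1 ? "S1" : "S12"
    else
        return rand() < x.P2 ? "S2" : "S12"
    end
end

# Base-only variant of learn!, with the four branches folded into two
function learn!(x::VariationalLearner, s::String)
    g1 = rand() < x.p                     # true: try G1; false: try G2
    if (g1 && s != "S2") || (!g1 && s == "S1")
        x.p = x.p + x.gamma * (1 - x.p)   # reward G1 or punish G2
    else
        x.p = x.p - x.gamma * x.p         # punish G1 or reward G2
    end
    return x.p
end

interact!(x::VariationalLearner, y::VariationalLearner) = learn!(y, speak(x))

Random.seed!(1)
pop = [VariationalLearner(0.1, 0.01, 0.4, 0.1) for i in 1:1000]

for t in 1:100
    x = rand(pop)
    y = rand(pop)
    interact!(x, y)   # mutates y, which lives inside pop
end

# Each interaction updates one listener, so at most 100 agents differ from 0.1
changed = count(a -> a.p != 0.1, pop)
println(changed)
```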

```julia
pop = [VariationalLearner(0.1, 0.01, 0.4, 0.1) for i in 1:1000]

for t in 1:100
    x = rand(pop)
    y = rand(pop)
    interact!(x, y)
end
```

Exercise

Write the same thing using an array comprehension instead of a for loop.

Answer

```julia
pop = [VariationalLearner(0.1, 0.01, 0.4, 0.1) for i in 1:1000]

[interact!(rand(pop), rand(pop)) for t in 1:100]
```

```
100-element Vector{Float64}:
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 ⋮
 0.09801
 0.099
 0.10900000000000001
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
 0.099
```

Next time

- Next week, we will learn how to summarize the state of an entire population

- This will allow us to track the population’s behaviour over time and hence model potential language change

- This week’s homework is all about consolidating the ideas we’ve looked at so far – the variational learner and basics of Julia