Structured populations

Agent-based modelling, Konstanz, 2024

Henri Kauhanen

14 May 2024

Plan

- So far, we have had agents interacting randomly

- We did this by:

  - initializing a population, `pop`, using an array comprehension

  - using `rand(pop)` to sample random agents

  - using our own function `interact!` to make two agents interact

- Today: using Agents.jl to work with structured populations

Positional vs. keyword arguments

- First, though, a technical remark about function arguments

- We’ve seen function calls like this:

- Here,

  - `1:100` and `(1:100) .^ 2` are positional arguments

  - `seriestype = :scatter` and `color = :blue` are keyword arguments

- You can swap the order of the latter but not of the former
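The call itself is not reproduced here. As a minimal sketch of the same distinction, the following uses two Base functions, `div` and `sort`, as stand-ins chosen for illustration (they are not the call from the slide): swapping positional arguments changes the result, swapping keyword arguments does not.

```julia
# Positional arguments: order matters.
div(7, 2)   # 3
div(2, 7)   # 0

# Keyword arguments: order does not matter.
v = [3, -1, 2]
a = sort(v; by = abs, rev = true)
b = sort(v; rev = true, by = abs)
a == b      # true, both are [3, 2, -1]
```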

Positional vs. keyword arguments

- To create keyword arguments in your own function, you separate the positional arguments from the keyword arguments with a semicolon (`;`):
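A small sketch of such a definition; the function `scaled_power` and its argument names are hypothetical, made up for this illustration.

```julia
# x and y are positional; exponent and scale are keyword arguments.
function scaled_power(x, y; exponent, scale)
    return scale * (x + y)^exponent
end

scaled_power(1, 2; exponent = 2, scale = 10)   # 90
scaled_power(1, 2; scale = 10, exponent = 2)   # 90, keyword order is free
```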

- If you don’t want any positional arguments, you have to write the following!
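As a sketch, a keyword-only function keeps the semicolon even though nothing precedes it. The function `rectangle_area` is a hypothetical example.

```julia
# No positional arguments: the argument list starts with the semicolon.
function rectangle_area(; width, height)
    return width * height
end

rectangle_area(width = 3, height = 4)   # 12
```

Calling `rectangle_area(3, 4)` would fail with a `MethodError`, since no method accepting positional arguments was defined.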

Positional vs. keyword arguments

- Keyword arguments can have default values (positional arguments can too, but those with defaults must then come last among the positional arguments):
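A minimal sketch of keyword defaults; `greet` and its arguments are hypothetical names used only for illustration.

```julia
# greeting and punctuation fall back to their defaults when omitted.
function greet(name; greeting = "Hello", punctuation = "!")
    return string(greeting, ", ", name, punctuation)
end

greet("Ada")                       # "Hello, Ada!"
greet("Ada"; punctuation = "?")    # "Hello, Ada?"
```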

Structured populations

- I define a population to be structured whenever speakers do not interact fully at random

- Formally:

- let \(P(X)\) = probability of sampling agent \(X\) for an interaction

- let \(P(Y \mid X)\) = (conditional) probability of sampling agent \(Y\), given that \(X\) was already sampled

- then the population is structured if \(P(Y \mid X) \neq P(Y)\) for at least some pair of agents

- Example: \(P(\text{D. Trump} \mid \text{Henri}) = 0\) even though \(P(\text{D. Trump}) > 0\)

Implementation

- It would be possible for us to write code for structured populations from scratch

- However, this would be more of an exercise in programming than in ABMs…

- We’re better off, here, using code written by other people

- Enter the Agents.jl package, an ecosystem/framework for ABMs in Julia

- You should already have Agents installed. If not, now’s the time to:
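A sketch of the installation step, assuming Julia's standard `Pkg` interface and the registered package name `Agents`; this is not necessarily the exact command used in the lecture, and it needs an internet connection.

```julia
using Pkg
Pkg.add("Agents")   # downloads and installs Agents.jl into the active environment
```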

Agents.jl basic steps

- Decide on model space (e.g. social network)

- Define agent type(s) (using special `@agent` keyword)

- Define rules that evolve the model

- Initialize your model with `AgentBasedModel`

- Evolve, visualize and collect data

- This may seem intimidating at first, but is really quite simple!

- Let’s walk through an example: variational learners in space

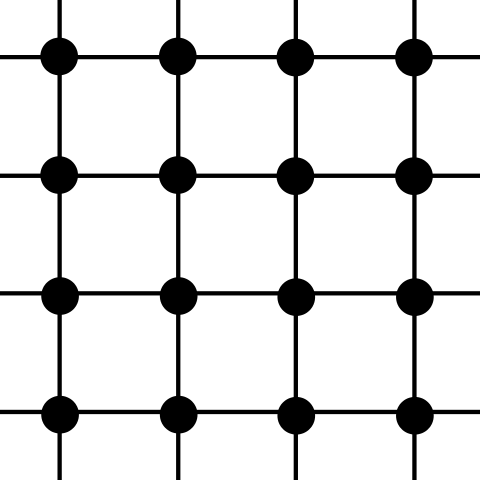

Grid space

- For this, we will reuse (with some modifications) our code for variational learners

- And assume that individual learners/agents occupy the nodes of a grid, also known as a two-dimensional regular lattice:

- Point: interactions only occur along links in this grid

Grid space: implementation

- To implement this in Julia with Agents.jl:

- `(50, 50)` is a data structure known as a tuple

- `GridSpaceSingle` comes from Agents.jl and defines a grid space in which each node can carry at most one agent (hence, `Single`)
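A sketch of what the space definition might look like, assuming Agents.jl is installed. The variable names `dims` and `space` are assumptions, not taken from the slide.

```julia
using Agents

dims = (50, 50)                 # a tuple: 50 rows, 50 columns
space = GridSpaceSingle(dims)   # at most one agent per node
```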

Aside: tuples are immutable

Note

When initializing a GridSpaceSingle, you must use a tuple!
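To illustrate the aside with a minimal sketch: the elements of an array can be changed after creation, those of a tuple cannot. The variable names are hypothetical.

```julia
as_array = [50, 50]
as_array[1] = 10          # fine: arrays are mutable

as_tuple = (50, 50)
err = try
    as_tuple[1] = 10      # not allowed: tuples are immutable
    nothing
catch e
    e
end

err isa MethodError       # true
```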

Agent redefinition

- To make use of the machinery provided by Agents.jl, we replace:

Agent redefinition

- with this:

Agent redefinition

- `@agent` is a special “macro” (more on these later) that introduces all agents in Agents.jl

- `GridAgent{2}` instructs Agents.jl that this agent is to be used in a 2-dimensional grid space
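A sketch of the redefinition, assuming the `@agent struct` syntax of Agents.jl version 6. The field names `p` and `gamma` appear later in these slides; `P1` and `P2` are assumptions about the remaining two fields.

```julia
using Agents

# Before (plain Julia, shown as a comment so the name is defined only once):
#   mutable struct VariationalLearner
#       p::Float64
#       gamma::Float64
#       P1::Float64
#       P2::Float64
#   end

# After: @agent adds the fields that Agents.jl needs (id and pos)
# in front of our own fields.
@agent struct VariationalLearner(GridAgent{2})
    p::Float64       # probability of using grammar G1
    gamma::Float64   # learning rate
    P1::Float64      # assumed field, see lead-in
    P2::Float64      # assumed field, see lead-in
end
```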

Stepping rule

- We next need a function that evolves, i.e. steps, the model

- This is a function that takes a single agent and the model as arguments

- We can make use of the `interact!` function we have already written:
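A sketch of such a stepping rule. The `interact!` function from earlier sessions is not reproduced in these slides, so the version below is a hypothetical stand-in (a simple linear reward-penalty learner), not the course's own code. The name `VL_step!` is likewise an assumption; `random_nearby_agent` comes from Agents.jl.

```julia
using Agents   # provides random_nearby_agent

# Hypothetical stand-in for interact!: the speaker utters a string,
# the listener tries to parse it and adjusts its p accordingly.
# Works on any mutable object with fields p, gamma, P1 and P2.
function interact!(speaker, listener)
    speaker_uses_g1 = rand() < speaker.p
    # The string is diagnostic of the speaker's grammar with probability P1 or P2.
    diagnostic = rand() < (speaker_uses_g1 ? speaker.P1 : speaker.P2)
    listener_uses_g1 = rand() < listener.p
    # Parsing fails only when the string is diagnostic of the other grammar.
    parse_ok = !diagnostic || (speaker_uses_g1 == listener_uses_g1)
    if listener_uses_g1 == parse_ok
        # G1 succeeded or G2 failed: move p towards 1
        listener.p += listener.gamma * (1 - listener.p)
    else
        # G1 failed or G2 succeeded: move p towards 0
        listener.p -= listener.gamma * listener.p
    end
    return listener
end

# Stepping rule: a random neighbour speaks, the stepped agent listens.
function VL_step!(agent, model)
    interlocutor = random_nearby_agent(agent, model)
    interact!(interlocutor, agent)
end
```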

Model initialization

- We now have all the ingredients we need to initialize the ABM:

Model initialization

- This creates a sort of “empty” container (it has no agents yet). To add agents, we call:

VariationalLearner(1, (4, 27), 0.1, 0.01, 0.4, 0.1)

- Note: the values of the agent’s internal fields (`p`, `gamma` etc.) are specified as keyword arguments!

Model initialization

- We have a space of 50 x 50 = 2,500 nodes

- Let’s add 2,499 more agents:

- Check number of agents:
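The initialization steps can be sketched as follows, assuming the Agents.jl version 6 interface (`StandardABM`, `add_agent_single!`, `nagents`). The field names `P1` and `P2` are assumptions, and the stepping rule is replaced by a do-nothing placeholder so that the block runs on its own.

```julia
using Agents

@agent struct VariationalLearner(GridAgent{2})
    p::Float64
    gamma::Float64
    P1::Float64   # assumed field name
    P2::Float64   # assumed field name
end

# Placeholder stepping rule, only here to make the sketch self-contained.
VL_step!(agent, model) = nothing

space = GridSpaceSingle((50, 50))
model = StandardABM(VariationalLearner, space; agent_step! = VL_step!)

# First agent, placed on a random empty node.
add_agent_single!(model; p = 0.1, gamma = 0.01, P1 = 0.4, P2 = 0.1)

# The remaining 2,499 agents fill the rest of the grid.
for _ in 1:2499
    add_agent_single!(model; p = 0.1, gamma = 0.01, P1 = 0.4, P2 = 0.1)
end

nagents(model)   # 2500
```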

Stepping the model

- Stepping the model is now easy:

StandardABM with 2500 agents of type VariationalLearner

agents container: Dict

space: GridSpaceSingle with size (50, 50), metric=chebyshev, periodic=true

scheduler: fastest

- Or, for a desired number of steps:

Stepping the model

Important

Stepping in Agents.jl is controlled by a so-called scheduler. You can decide which scheduler to use when initializing your model; for now, we will stick to the default scheduler.

This is important to know: when the default scheduler steps a model, every agent gets updated. In the case of our model, this means that every agent undergoes exactly one interaction as the “listening” party, i.e. every agent gets to learn from exactly one interaction during one time step.
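The claim that every agent is updated once per step can be checked on a toy model. The `Counter` agent type and the `count_step!` rule are hypothetical and exist only for this check; the sketch assumes the Agents.jl version 6 interface.

```julia
using Agents

# Each agent simply counts how many times it has been updated.
@agent struct Counter(GridAgent{2})
    updates::Int
end

count_step!(agent, model) = (agent.updates += 1)

toy = StandardABM(Counter, GridSpaceSingle((5, 5)); agent_step! = count_step!)
for _ in 1:25
    add_agent_single!(toy; updates = 0)
end

step!(toy, 3)   # three time steps with the default scheduler

all(a.updates == 3 for a in allagents(toy))   # true
```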

Plotting the population

- With Agents.jl, we also have access to a number of functions that can be used for purposes of visualization

- `abmplot(model)` plots `model` in its current state. It has two return values:

  - the first one is the plot itself

  - the second one contains metadata which we usually don’t need to care about

- To actually see the plot, you have to call the first return value explicitly:

Plotting the population

- So all we get is a purple square… what gives?

- The problem is that we haven’t yet instructed `abmplot` how we want our model to be visualized

- We could in principle be interested in plotting various kinds of things

- For our model here, it makes sense to plot the value of `p`, i.e. each learner’s internal “grammatical state”

- This is done with a function that maps an agent to its `p`

Plotting the population

- In this case, the function is as simple as:
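A sketch of such a function. The name `getp` is the one used in these slides; the `NamedTuple` below is a hypothetical stand-in for an agent, used only so that the block runs without Agents.jl.

```julia
# Map an agent to the value it should be coloured by.
getp(a) = a.p

# Works on anything that has a field called p.
toy_agent = (p = 0.25, gamma = 0.01)
getp(toy_agent)   # 0.25
```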

Plotting the population

- We now pass our `getp` function as the value of the `agent_color` keyword argument to `abmplot`:

Plotting the population

- We can see how the population changes as we evolve the model further (the new `as` keyword argument specifies the size of the dots that represent the agents):

Plotting the population

- And further:

Plotting the population

- Making an animation/video allows us to visualize the evolution dynamically

- This is achieved with the `abmvideo` function

A more interesting example

- Above, we initialized the population so that everybody had `p = 0.1` in the beginning

- What about the following: at time \(t=0\),

  - three people have `p = 1` (use \(G_1\) all the time),

  - every other person has `p = 0` (uses \(G_2\) all the time)?

- Will we see \(G_1\) spread across the population?

- Let’s try!

A more interesting example

- We first reinitialize the model:
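One way the reinitialization might look, assuming the Agents.jl version 6 interface. The name `model2`, the field names `P1` and `P2`, and the parameter values carried over from the first model are assumptions; the stepping rule is again a do-nothing placeholder so that the block runs on its own.

```julia
using Agents

@agent struct VariationalLearner(GridAgent{2})
    p::Float64
    gamma::Float64
    P1::Float64   # assumed field name
    P2::Float64   # assumed field name
end

# Placeholder stepping rule, only here to make the sketch self-contained.
VL_step!(agent, model) = nothing

model2 = StandardABM(VariationalLearner, GridSpaceSingle((50, 50)); agent_step! = VL_step!)

# The first three agents start with p = 1, everybody else with p = 0.
# add_agent_single! picks a random empty node, so the three end up scattered.
for i in 1:2500
    add_agent_single!(model2; p = (i <= 3 ? 1.0 : 0.0), gamma = 0.01, P1 = 0.4, P2 = 0.1)
end

count(a -> a.p == 1.0, allagents(model2))   # 3
```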

A more interesting example

- Visualize initial state of population:

A more interesting example

- Animate for 1,000 iterations (every agent gets to learn 1,000 times):

Next time

- Social networks, and how to implement them in Agents.jl

- Wrapping up some technical topics: modules, abstract types and inheritance